How to Use DeepSeek with Ollama and Continue in VSCode

A Step-by-Step Tutorial

Prerequisites

- VSCode installed on your machine.

- Basic familiarity with terminal commands.

Step 1: Install Ollama

First, install Ollama to run AI models locally.

For macOS/Linux:

curl -fsSL https://ollama.com/install.sh | sh

For Windows (Preview):

Download the installer from Ollama.com and run it.

Start the Ollama service:

ollama serve

(Keep this terminal running in the background.)
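To confirm the server is up without opening VSCode, you can probe it from a short script. The following is a minimal Python sketch. It assumes Ollama is listening on its default address, http://localhost:11434 (the same URL used as baseUrl in Step 4). The helper name is_ollama_running is hypothetical, and the check only looks for an HTTP 200 reply on the server's root path.

```python
import urllib.error
import urllib.request

# Assumption: Ollama is listening on its default address and port.
DEFAULT_BASE_URL = "http://localhost:11434"


def is_ollama_running(base_url: str = DEFAULT_BASE_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP GET on the server root answers with status 200.

    Hypothetical helper: any connection problem is treated as "not running".
    """
    try:
        with urllib.request.urlopen(base_url.rstrip("/") + "/", timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, HTTPError, ConnectionRefusedError and timeouts all derive from OSError.
        return False


if __name__ == "__main__":
    if is_ollama_running():
        print("Ollama is reachable at", DEFAULT_BASE_URL)
    else:
        print("Ollama is not reachable; is `ollama serve` running?")
```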

Step 2: Download the DeepSeek Model

Pull the DeepSeek model from Ollama’s library. For example, use deepseek-coder (adjust the version as needed):

ollama pull deepseek-coder:33b-instruct-q4_K_M

(Replace with your preferred variant, e.g., 6.7b or 1.3b for lighter models.)
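To confirm the download succeeded, run ollama list, or query the server's /api/tags endpoint, which returns the locally available models as JSON. The Python sketch below assumes the default server address and a response shaped like {"models": [{"name": ...}, ...]}. The helper names are hypothetical. It treats an untagged name such as deepseek-coder as deepseek-coder:latest, which is how Ollama names a model pulled without a tag.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434"  # assumption: default Ollama address


def normalize(name: str) -> str:
    """Ollama stores models pulled without a tag as '<name>:latest'."""
    return name if ":" in name else name + ":latest"


def model_names(tags_response: dict) -> list[str]:
    """Extract the model names from a parsed /api/tags response."""
    return [m["name"] for m in tags_response.get("models", [])]


def has_model(tags_response: dict, wanted: str) -> bool:
    """True if `wanted` (tag optional) is among the locally pulled models."""
    target = normalize(wanted)
    return any(normalize(n) == target for n in model_names(tags_response))


def fetch_tags(base_url: str = BASE_URL, timeout: float = 5.0) -> dict:
    """GET /api/tags from a running Ollama server and parse the JSON body."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)
```

With the server running, has_model(fetch_tags(), "deepseek-coder:33b-instruct-q4_K_M") should return True after the pull above. The exact name it matches is the string that belongs in config.json in Step 4.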

Step 3: Install Continue in VSCode

- Open VSCode.

- Go to Extensions (Ctrl+Shift+X / Cmd+Shift+X).

- Search for “Continue” and install it.

Alternatively, open Quick Open (Ctrl+P / Cmd+P), paste the following command, and press Enter:

ext install Continue.continue

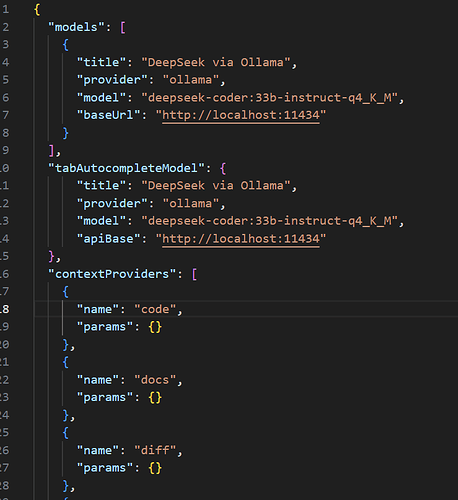

Step 4: Configure Continue to Use Ollama & DeepSeek

Open the Continue configuration file in VSCode:

- Press Ctrl+Shift+P (or Cmd+Shift+P on macOS).

- Search for “Continue: Open config.json” and select it.

Add Ollama as a model provider and specify DeepSeek:

{
  "models": [
    {
      "title": "DeepSeek via Ollama",
      "provider": "ollama",
      "model": "deepseek-coder:33b-instruct-q4_K_M",
      "baseUrl": "http://localhost:11434"
    }
  ]
}

(Match the model name to the one you downloaded in Step 2.)

- Save the file (Ctrl+S / Cmd+S).
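If you prefer to script this edit, for example to keep several machines in sync, the sketch below merges the same entry into a config file. It assumes the file holds plain JSON with a top-level "models" list, as in the example above. The default path ~/.continue/config.json is an assumption about where Continue keeps the file, so pass your own path if yours differs. The function names are hypothetical.

```python
import json
from pathlib import Path

# Assumed location of Continue's JSON config; pass your own path if yours differs.
DEFAULT_CONFIG_PATH = Path.home() / ".continue" / "config.json"


def upsert_ollama_model(
    config: dict,
    model: str,
    title: str = "DeepSeek via Ollama",
    base_url: str = "http://localhost:11434",
) -> dict:
    """Add or replace an Ollama entry in a Continue-style config dict.

    Entries are matched by "title", so running this twice does not create duplicates.
    The dict is modified in place and also returned for convenience.
    """
    entry = {"title": title, "provider": "ollama", "model": model, "baseUrl": base_url}
    models = config.setdefault("models", [])
    for i, existing in enumerate(models):
        if existing.get("title") == title:
            models[i] = entry  # overwrite the stale entry with the same title
            break
    else:
        models.append(entry)  # no entry with this title yet
    return config


def update_config_file(model: str, config_path: Path = DEFAULT_CONFIG_PATH) -> dict:
    """Load the config (if present), upsert the model entry, and write it back."""
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    upsert_ollama_model(config, model)
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n")
    return config
```

Because entries are matched by title, switching variants (for example from 33b to 6.7b) replaces the existing DeepSeek entry instead of adding a second one. Other models and top-level keys already in the file are left untouched.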

Step 5: Test the Integration

- Create a test file (e.g., test.py).

- Write a comment prompting DeepSeek:

# Write a function to reverse a string in Python

- Place your cursor on the comment line and press Ctrl+Shift+I (or Cmd+Shift+I on macOS) to trigger Continue.

- DeepSeek will generate code suggestions!
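For reference, one plausible completion for this prompt looks like the following. DeepSeek's actual output will vary between runs and model sizes, and the function name reverse_string is only illustrative.

```python
def reverse_string(s: str) -> str:
    """Return `s` with its characters in reverse order."""
    # A slice with step -1 walks the string from the last character to the first.
    return s[::-1]


if __name__ == "__main__":
    print(reverse_string("hello"))  # prints "olleh"
```

Slicing reverses Unicode code points, so strings containing combining characters may not reverse the way a reader expects.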

Troubleshooting Tips

- Ollama Isn’t Running: Ensure ollama serve is running in the background.

- Model Not Found: Double-check the model name in config.json and ensure you’ve pulled it via ollama pull.

- Slow Responses: Use a smaller DeepSeek variant (e.g., 1.3b) or upgrade your hardware.
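To compare variants for speed, you can use the timing that Ollama's /api/generate endpoint reports. In a non-streaming reply, eval_count is the number of generated tokens and eval_duration is the generation time in nanoseconds, so tokens per second is eval_count / eval_duration * 10^9. The Python sketch below assumes the default server address and uses hypothetical helper names. Each tag you pass must already be pulled.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434"  # assumption: default Ollama address


def tokens_per_second(result: dict) -> float:
    """Generation speed from a /api/generate reply.

    eval_count is the number of generated tokens; eval_duration is in nanoseconds.
    """
    return result["eval_count"] * 1e9 / result["eval_duration"]


def generate(model: str, prompt: str, base_url: str = BASE_URL, timeout: float = 300.0) -> dict:
    """Send one non-streaming generation request and return the parsed JSON reply."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=payload,  # supplying a body makes urllib send a POST
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


def compare_variants(models: list[str], prompt: str) -> dict[str, float]:
    """Tokens per second for each model tag on the same prompt."""
    return {m: tokens_per_second(generate(m, prompt)) for m in models}
```

With the server running, compare_variants(["deepseek-coder:1.3b", "deepseek-coder:6.7b"], "Write a function to reverse a string in Python") returns one speed figure per tag. This shows the size-versus-speed trade-off behind the Slow Responses tip on your own hardware. The first request to each model also includes load time, so run it twice before comparing.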

By following these steps, you’ve transformed VSCode into an AI-powered IDE with DeepSeek and Ollama!

For more details, visit the Continue Documentation or Ollama’s Blog.
