Introduction

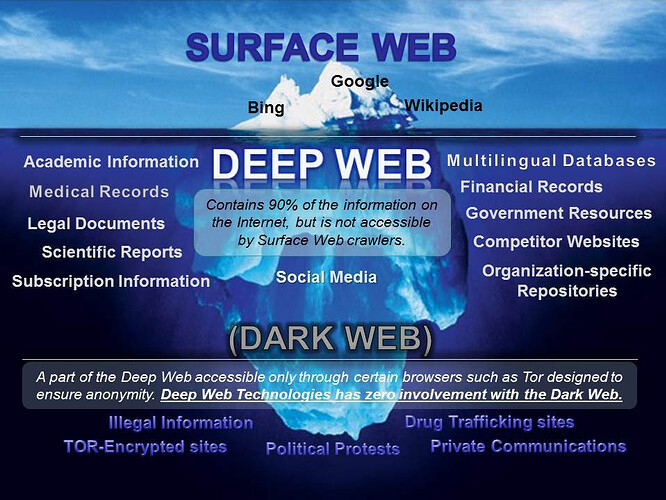

The dark web is being used more and more for illicit activity by nefarious actors. It’s becoming easier to access as knowledge of Tor becomes more mainstream and cryptocurrencies provide a means to monetize it. Also, just like the clearnet, it only gets larger with time. Encrypted messaging apps allow people to exchange hidden onion webpages without risk of exposure to authorities. As OSINT investigators, collectors, and analysts, we have to be familiar with the dark web and be able to navigate it efficiently. We also have to be able to extract information from it in a targeted, efficient manner. Here are a few tools to get you started, get you familiar, and get you comfortable with the difficult task of inspecting the dark web.

Hunchly Dark Web

If you’re looking for a low-tech solution for databasing sources for dark web research, Hunchly Daily Dark Web Reports are probably a good place to start. There are two ways of going about this. You can either subscribe via email on the Hunchly website, or you can simply follow the daily posts on the Hunchly Twitter page. It’s important to note that this is just a discovery tool. Hunchly explicitly states they do not analyze the hidden services for content. The links you receive could lead to drug markets, child pornography, malware, or other sensitive content. Hunchly is not responsible for the dissemination of such content; use at your own discretion.

DarkSearch

Another low-tech solution is one that has been making the news lately. Sector035 reported on it in Week in OSINT and others have mentioned it on the Twittersphere. It’s called DarkSearch. It seems to be a reliable dark web search engine with the ability to use advanced search operators. You can view this search engine in any web browser, but you will only be able to follow the links in its index by using Tor or similar. While its search operators are not as robust as Google Dorks, you can achieve quite specific results with the ones it offers. I really like the boost operator (^), which allows you to emphasize a term in your search query. If the developers are reading this and my request is possible, I’d like to know if there is a way to specify date ranges for results; I would like to not have to open every single search result to discover the age of the post!

TorBot

If you’re looking for an advanced tool for dark web research, TorBot is probably overkill, and will likely continue to be. As of this writing, the last update to TorBot was in February. It uses Python 3.x and requires a Tor dependency. TorBot has a list of features that make it useful for multiple applications. Features include:

- Onion Crawler (.onion).(Completed)

- Returns Page title and address with a short description about the site.(Partially Completed)

- Save links to database.(PR to be reviewed)

- Get emails from site.(Completed)

- Save crawl info to JSON file.(Completed)

- Crawl custom domains.(Completed)

- Check if the link is live.(Completed)

- Built-in Updater.(Completed)

- Visualizer module.(Not started)

- Social Media integration.(not Started) …(will be updated)

What I appreciate about TorBot is how ambitious the project is. There is a laundry list of exciting promised features currently in the works, including:

- Visualization Module

- Implement BFS Search for webcrawler

- Multithreading for Get Links

- Improve stability (Handle errors gracefully, expand test coverage and etc.)

- Create a user-friendly GUI

- Randomize Tor Connection (Random Header and Identity)

- Keyword/Phrase search

- Social Media Integration

- Increase anonymity and efficiency

Make sure to put in the time to get this script running.
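To make the "BFS Search for webcrawler" item a little more concrete, here is a minimal sketch of a breadth-first crawl. This is not TorBot's code. The names `bfs_crawl` and `get_links` are hypothetical, and the link fetcher is passed in as a function so the traversal logic can be tried without touching Tor at all.

```python
from collections import deque


def bfs_crawl(start_url, get_links, max_depth=2):
    """Visit pages level by level, starting from start_url.

    get_links is any callable that takes a URL and returns the URLs
    found on that page (hypothetical; supply your own fetcher).
    Returns a list of (url, depth) tuples in visiting order.
    """
    visited = {start_url}
    order = []
    queue = deque([(start_url, 0)])

    while queue:
        url, depth = queue.popleft()
        order.append((url, depth))
        if depth == max_depth:
            continue  # do not expand pages at the depth limit
        for link in get_links(url):
            if link not in visited:
                visited.add(link)
                queue.append((link, depth + 1))
    return order


if __name__ == "__main__":
    # A fake link graph standing in for real pages.
    graph = {"a": ["b", "c"], "b": ["d", "a"], "c": ["d"], "d": ["e"]}
    for url, depth in bfs_crawl("a", lambda u: graph.get(u, []), max_depth=2):
        print(depth, url)
```

Breadth-first order means you see everything one click away from the seed before anything two clicks away, which is usually what you want when time is limited.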

Fresh Onions

Fresh Onions is a tool that hasn’t been updated in a while. As a disclaimer, you may have issues running the script, since the last GitHub push was in 2017. However, even as an academic example of what is possible on the dark web using Python, it’s worth taking a look at what features this tool offers or once offered. Here’s a list of the features:

- Crawls the darknet looking for new hidden service

- Find hidden services from a number of clearnet sources

- Optional fulltext elasticsearch support

- Marks clone sites of the /r/darknet superlist

- Finds SSH fingerprints across hidden services

- Finds email addresses across hidden services

- Finds bitcoin addresses across hidden services

- Shows incoming / outgoing links to onion domains

- Up-to-date alive / dead hidden service status

- Portscanner

- Search for “interesting” URL paths, useful 404 detection

- Automatic language detection

- Fuzzy clone detection (requires elasticsearch, more advanced than superlist clone detection)

- Doesn’t fuck around in general.

That last feature is very important. Now, this spider is hosted on the dark web and the developer has provided the onion URL. You can view it on the GitHub repo. If you can get this tool to work, you can combine it with other databasing tools like the ones previously discussed to create your own archive. I don’t have a ton of experience with this tool, though many in the OSINT community have commented about its value. Make sure to check it out as you sift through this list!
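A couple of the features listed above, finding email and bitcoin addresses, come down to pattern matching over page text. Here is a rough sketch of that idea, not Fresh Onions' actual implementation. The function name `extract_indicators` is hypothetical, and the regular expressions are loose format checks only: they do not verify bitcoin checksums and will produce some false positives.

```python
import re

# Loose email pattern; good enough for triage, not RFC-complete.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

# Rough bitcoin address shapes: legacy Base58 (starts with 1 or 3)
# and bech32 (starts with bc1). Format only, no checksum validation.
BTC_RE = re.compile(
    r"\b(?:[13][a-km-zA-HJ-NP-Z1-9]{25,34}|bc1[ac-hj-np-z02-9]{11,71})\b"
)


def extract_indicators(text):
    """Return de-duplicated, sorted emails and bitcoin-like strings."""
    return {
        "emails": sorted(set(EMAIL_RE.findall(text))),
        "bitcoin": sorted(set(BTC_RE.findall(text))),
    }


if __name__ == "__main__":
    sample = (
        "Contact admin@example.onion. "
        "Donate: 1A1zP1eP5QGefi2DMPTfTL5SLmv7DivfNa thanks"
    )
    print(extract_indicators(sample))
```

Running something like this over saved crawl output gives you pivot points (an email or wallet reused across services) without opening each page by hand.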

Onioff

Once you’ve created a database of hidden services and onion domains on Tor, you need to inspect them to avoid exposing yourself to malicious material or worse. Onioff is an onion URL inspector for deep web links. The developer was kind enough to create a demo (so I don’t have to!). It’s also worth noting that the developer is a high school student. His other projects include dcipher, caesar, and kickthemout. All are pretty awesome, high-level stuff.

Make sure you know what you’re about to open before you open a link!
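Before you even hand a link to an inspector, you can cheaply weed out malformed or mistyped addresses offline. The sketch below is not how Onioff works; it is a complementary check that assumes the version 3 onion address format (56 base32 characters encoding a 32-byte public key, a 2-byte checksum, and a version byte). The function name `is_valid_v3_onion` is hypothetical, and older 16-character v2 addresses will be rejected by this check.

```python
import base64
import hashlib
import re
from urllib.parse import urlsplit

V3_LABEL = re.compile(r"[a-z2-7]{56}")


def is_valid_v3_onion(url_or_host):
    """Return True if the input contains a well-formed v3 onion address.

    This only checks structure and checksum; it says nothing about
    whether the service is alive or safe to visit.
    """
    value = url_or_host.strip().lower()
    if "://" not in value:
        value = "http://" + value  # let urlsplit find the hostname
    try:
        host = urlsplit(value).hostname or ""
    except ValueError:
        return False
    if not host.endswith(".onion"):
        return False

    # Use the label directly before ".onion"; subdomains are ignored.
    label = host[: -len(".onion")].split(".")[-1]
    if not V3_LABEL.fullmatch(label):
        return False

    raw = base64.b32decode(label.upper())  # 56 chars -> 35 bytes
    pubkey, checksum, version = raw[:32], raw[32:34], raw[34:]
    if version != b"\x03":
        return False
    expected = hashlib.sha3_256(
        b".onion checksum" + pubkey + version
    ).digest()[:2]
    return checksum == expected
```

A single mistyped character will almost always fail the checksum, so this is a quick way to clean a list of collected links before spending time inspecting them.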

TorCrawl

Now if you’re looking for a powerful, robust tool that has a really good wiki, I’m bringing up the rear with TorCrawl. TorCrawl not only crawls hidden services on Tor, it also extracts the code from each service’s webpage. Installation is pretty standard: clone the Git repository and install the requirements with pip. If you don’t already have the Tor service installed, the wiki provides a link to instructions on how to do that. If you’ve made it this far without doing that already, bravo.

So, what is this useful for? In a world with infinite time, you could set up and run TorBot, figure out how to get everything running, and have a reliable tool that will consistently get new DLCs. In a semi-perfect world, you’d have the time to database services with subscriptions, manual tools, and Fresh Onions, then inspect each onion webpage for possible malicious content, then manually inspect each page for your investigation. But it’s not a perfect world. In most cases the Pareto Principle applies, and you have to get the most work done in the least amount of time. So instead of worrying about crawling, inspection, then investigation, do it all in one with TorCrawl. You get the webpage markup, so you can view the content without having to physically access the page. You can also view the static webpage by saving it as an .html file.
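Once you have the markup saved, you can triage it without rendering it in a browser. Here is a minimal sketch of that idea using only Python's standard library. It is not part of TorCrawl; the names `MarkupSummary` and `summarize_markup` are hypothetical, and the sample HTML is made up for illustration.

```python
from html.parser import HTMLParser


class MarkupSummary(HTMLParser):
    """Collect the title, link targets, and visible text of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self.text = []
        self._in_title = False
        self._skip = 0  # depth inside <script>/<style>

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag in ("script", "style"):
            self._skip += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        chunk = data.strip()
        if not chunk:
            return
        if self._in_title:
            self.title += chunk
        elif not self._skip:
            self.text.append(chunk)


def summarize_markup(markup):
    """Parse saved markup and return a small dict describing the page."""
    parser = MarkupSummary()
    parser.feed(markup)
    parser.close()
    return {
        "title": parser.title,
        "links": parser.links,
        "text": " ".join(parser.text),
    }


if __name__ == "__main__":
    sample = (
        "<html><head><title>Example Market</title>"
        "<script>var x=1;</script></head>"
        "<body><h1>Welcome</h1>"
        "<a href='http://abc.onion/login'>Login</a></body></html>"
    )
    print(summarize_markup(sample))
```

Because nothing is rendered or executed, scripts and embedded media in the page never run; you only see the text and the links it points to.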

Check out TorCrawl and see which process you prefer. I haven’t spent enough time to consistently compare each tool/process nor have I seen how each tool performs over time. As of this writing, TorCrawl was last updated 25 days ago. It’s by far the most recently updated tool which gives me even more confidence in the tool and developer.
