Long-time Slashdot reader Artem S. Tashkinov shares a blog post from an indie game programmer who complains “The special upload tool I had to use today was a total of 230MB of client files, and involved 2,700 different files to manage this process.”

Oh, and BTW, it gives error messages, and right now it doesn’t work. Sigh.

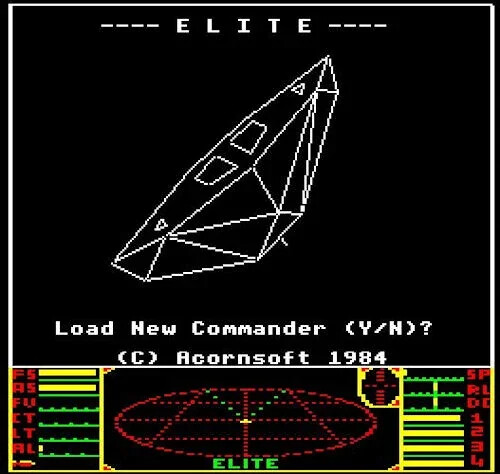

I’ve seen coders do this. I know how this happens. It happens because not only are the coders not doing low-level, efficient code to achieve their goal, they have never even SEEN low level, efficient, well written code. How can we expect them to do anything better when they do not even understand that it is possible…? It’s what they learned. They have no idea what high performance or constraint-based development is…

Computers are so fast these days that you should be able to consider them absolute magic. Everything you could possibly imagine should happen within the sixtieth of a second between screen refreshes. And yet, when I click the volume icon on my Microsoft Surface laptop (pretty new), there is a VISIBLE DELAY as the machine gradually builds up a new user interface element, eventually works out which icons to draw, has them pop in, and they go live. It takes ACTUAL TIME. I suspect half a second, which in CPU time is like a billion fucking years…

All I’m doing is typing this blog post. Windows has 102 background processes running. My Nvidia graphics card currently has 6 of them, and some of those have subtasks. To do what? I’m not running a game right now. I’m using about the same feature set from a video card driver as I would have TWENTY years ago, but 6 processes are required. Microsoft Edge WebView has 6 processes too, as does Microsoft Edge itself. I don’t even use Microsoft Edge. I think I opened an SVG file in it yesterday, and here we are: another 12 useless pieces of code wasting memory, and probably polling the CPU as well.

This is utter, utter madness. It’s why nothing seems to work, why everything is slow, why you need a new phone every year, and a new TV to load those bloated streaming apps, which must also be running code this bad. I honestly think it’s only going to get worse, because the big, dumb, useless tech companies like Facebook, Twitter, Reddit, etc. are the worst possible examples of this trend…

There was a golden age of programming, back when you had actual limitations on memory and CPU. Now we just live in an ultra-wasteful pit of inefficiency. It’s just sad.

Long-time Slashdot reader Z00L00K left a comment arguing that “All this is because everyone today programs on huge frameworks that have everything including two full-size kitchen sinks, one for right-handed people and one for left-handed.” But in another comment, Slashdot reader youn blames code generators, cut-and-paste programming, and the need to support multiple platforms.

But youn adds that, even with that said, “In the old days, there were a lot more blue screens of death… Sure, it still happens, but how often do you restart your computer these days?” They also submitted this list, arguing “There’s a lot more functionality than before.”

- Some software has been around a long time. Even though the /. crowd likes to bash Windows, you’ve got to admit its backward compatibility is outstanding

- A lot of things, like security, were not taken into consideration

- It’s a different computing environment… multitasking, internet, GPUs

- In the old days, there was one task running all the time. Today, there is a lot of error handling, including soft failures if the app is put to sleep

- A lot of code is due to software interacting with other software, and to compatibility with standards

- Shiny technology like microservices allows scaling and heterogeneous integration

So who’s right and who’s wrong? Leave your own best answers in the comments.

And are today’s programmers leaving too much code bloat?
